Quantum Boosting AI’s Performance

Artificial intelligence tries to predict the future based on current information, or to classify data. The heart of an AI is the model, which is basically its brain. A model is the result of learning (aka training), just as a normal brain has to learn. Your brain learns from the information it is given in the form of books, experiences, videos…

But unlike a brain, a model needs two main ingredients for training:

- First, you need data. A lot of data. Either as labeled data upfront, as labeled data in the form of user feedback, or both.

- Second, you need computing power. A lot of computing power.

GPT-3 by OpenAI

Maybe the most prominent example of an AI is GPT-3 by OpenAI. GPT-3 is used for text generation, while related OpenAI models such as DALL·E 2 generate images and can extend existing images by “outpainting”. But before it could do anything, GPT-3 had to be trained.

On Springboard you can find, next to some technical details, the amount of data that was used: 45 TB of data from sources like books, the web, Wikipedia, Reddit…

As per the creators, the OpenAI GPT-3 model has been trained about 45 TB text data from multiple sources which include Wikipedia and books.

https://www.springboard.com/blog/data-science/machine-learning-gpt-3-open-ai/

The second requirement is the necessary computing power. As Microsoft explains on its blog, the supercomputer it built for training OpenAI's models has more than 285,000 CPU cores and 10,000 GPUs. 😱

The supercomputer developed for OpenAI is a single system with more than 285,000 CPU cores, 10,000 GPUs and 400 gigabits per second of network connectivity for each GPU server.

https://blogs.microsoft.com/ai/openai-azure-supercomputer/

Quantum computer?

Quantum computing is the new kid on the block and gives hope that it can add a major boost. Frank Zickert has written an excellent book about quantum machine learning (QML) with Python and also runs a blog worth following. Brian Lenahan also never tires of explaining the possibilities of AI and quantum computing (check out both of his books).

I have found two papers about how quantum computing can impact artificial intelligence.

Fewer training data

The first paper is Generalization in quantum machine learning from few training data (by Matthias C. Caro, Hsin-Yuan Huang, M. Cerezo, Kunal Sharma, Andrew Sornborger, Lukasz Cincio and Patrick J. Coles). This paper shows that training a QML model is not as data hungry as expected. Previously, “[…] some studies have found that, exponentially in n, the number of qubits, large amounts of training data would be needed, assuming that one is trying to train an arbitrary unitary.” But as co-author Patrick Coles says, the need for training data is not as bad as feared.

“The need for large data sets could have been a roadblock to quantum AI, but our work removes this roadblock. While other issues for quantum AI could still exist, at least now we know that the size of the data set is not an issue,” said Patrick Coles.

https://discover.lanl.gov/news/0823-quantum-ai

This indicates that adding more and more qubits does not, by itself, make training exponentially more data hungry.
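To get a feeling for what this means, here is a rough back-of-the-envelope sketch. As I read the paper, its headline result is that the generalization error of a QML model with T trainable gates scales at worst roughly like sqrt(T/N) for N training points. The function name, the example circuit (3 layers with one trainable gate per qubit) and the target error of 0.1 are my own assumptions for illustration, and constants and logarithmic factors are ignored.

```python
import math


def training_points_needed(trainable_gates, target_error):
    """Smallest N with sqrt(T / N) <= target_error.

    Assumption: constants and logarithmic factors of the bound are ignored,
    so this is an order-of-magnitude estimate only.
    """
    return math.ceil(trainable_gates / target_error ** 2)


# Hypothetical circuit: 3 layers with one trainable gate per qubit per layer.
for n_qubits in (4, 8, 16, 32):
    gates = 3 * n_qubits
    estimate = training_points_needed(gates, 0.1)
    # Last column: an exponential-in-n count, shown only for comparison.
    print(n_qubits, gates, estimate, 2 ** n_qubits)
```

The estimate grows with the number of trainable gates, so as long as the circuit itself stays small, the data requirement stays far below anything exponential in the number of qubits.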

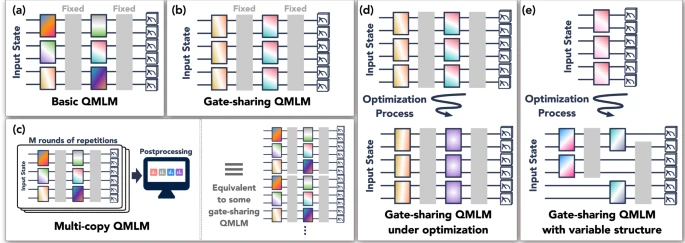

QNN – CNN on quantum steroids

Convolutional neural networks (CNNs for short) are the current standard for image analysis and use matrix operations to process pixel data. A quanvolutional neural network (QNN) is like a CNN, but instead of mapping the data to bits of 0 and 1, it maps the data to qubits and quantum states.

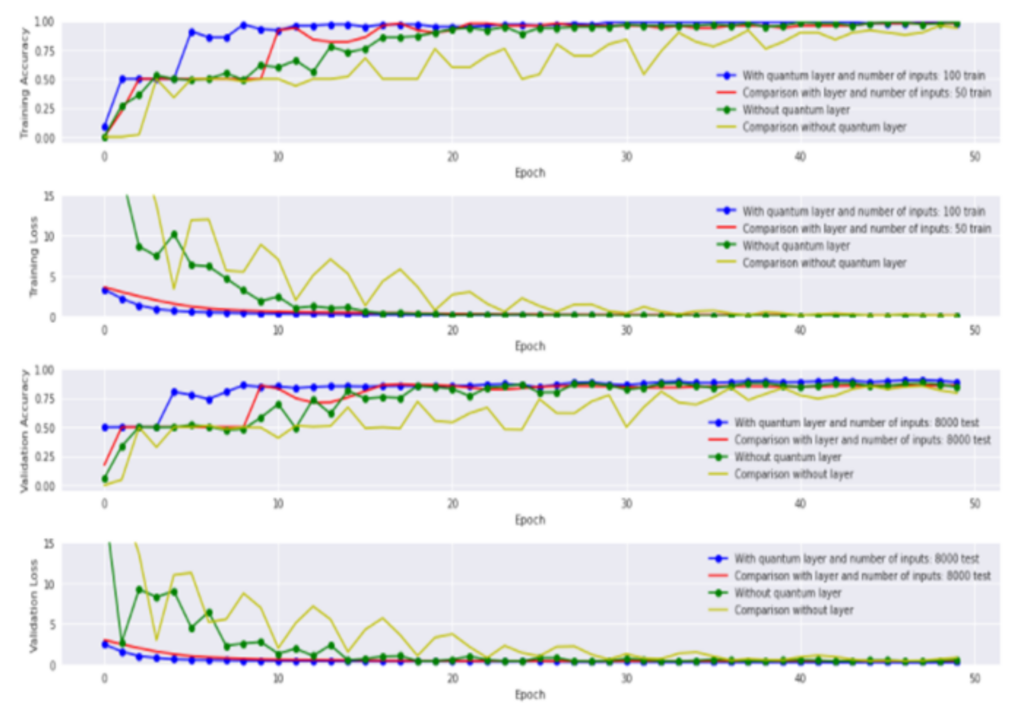
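To make the idea of a quantum convolution a bit more tangible, here is a minimal sketch of a single quanvolution filter. It is not the circuit from the paper and it does not use PennyLane: to keep it dependency-free, it simulates four qubits by hand in plain Python. The angle encoding via RY rotations, the fixed ring of CNOT gates and all function names are assumptions I chose for illustration. Each 2x2 pixel patch is encoded into four qubits, the qubits are entangled, and the four Pauli-Z expectation values become the four output channels.

```python
import math

N_QUBITS = 4  # one qubit per pixel of a 2x2 patch


def apply_ry(state, theta, wire):
    """Apply an RY(theta) rotation to one qubit of a real statevector."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    mask = 1 << wire
    new = state[:]
    for i in range(len(state)):
        if not i & mask:  # i has the qubit in |0>, j has it in |1>
            j = i | mask
            new[i] = c * state[i] - s * state[j]
            new[j] = s * state[i] + c * state[j]
    return new


def apply_cnot(state, control, target):
    """Flip the target qubit wherever the control qubit is |1>."""
    cmask, tmask = 1 << control, 1 << target
    new = state[:]
    for i in range(len(state)):
        if i & cmask:
            new[i ^ tmask] = state[i]
    return new


def expval_z(state, wire):
    """Expectation value of Pauli-Z on one qubit (between -1 and 1)."""
    mask = 1 << wire
    return sum((-1 if i & mask else 1) * a * a for i, a in enumerate(state))


def quanv_filter(patch):
    """Map four pixel values in [0, 1] to four expectation values."""
    state = [0.0] * (2 ** N_QUBITS)
    state[0] = 1.0  # start in |0000>
    for wire, pixel in enumerate(patch):
        state = apply_ry(state, math.pi * pixel, wire)  # angle encoding
    for wire in range(N_QUBITS):
        # Assumption: a fixed CNOT ring stands in for the filter circuit.
        state = apply_cnot(state, wire, (wire + 1) % N_QUBITS)
    return [expval_z(state, wire) for wire in range(N_QUBITS)]


def quanvolve(image):
    """Slide the filter over non-overlapping 2x2 patches of a 2D list."""
    out = []
    for r in range(0, len(image) - 1, 2):
        row = []
        for c in range(0, len(image[0]) - 1, 2):
            patch = [image[r][c], image[r][c + 1],
                     image[r + 1][c], image[r + 1][c + 1]]
            row.append(quanv_filter(patch))
        out.append(row)
    return out


# Hypothetical 4x4 grayscale image with pixel values scaled to [0, 1].
image = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.5, 0.5, 0.2, 0.8],
    [0.5, 0.5, 0.9, 0.1],
]
features = quanvolve(image)  # 2x2 grid of patches, 4 channels each
```

In a hybrid model, a feature map like `features` would then be fed into ordinary classical layers, just like the output of a classical convolution.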

The second paper, Predict better with less training data using a QNN, demonstrates how quantum computers can be used in computer vision and “[…]quantum convolutions into classical CNNs.” And as this is not a purely theoretical paper, it uses PennyLane to show empirical results.

In the scenario considered, our QNN model requires less training data to achieve better predictions than classical CNNs. In other words, we observe a genuine quantum advantage for a real world application scenario.

The sentence that follows is interesting, though: it hints that the way the data is encoded has a big impact.

This advantage is not so much due to quantum speedup but rather due to superior quantum encoding of small data sets.

This gives hope that quantum computing reduces the need for data and that, even with less data, higher accuracy can be achieved. The results indicate that a QNN has high potential compared to a classical CNN.

However, we gained a lot of evidence to merit further investigation of how QML models can be fit on much less data than classical models can.

Final thoughts

Quantum computing is known as a threat to the internet (Shor’s algorithm), as an opportunity for the internet (quantum-secure communication) or for sensing cavities in the earth (https://spectrum.ieee.org/quantum-sensors-gravity-birmingham). Maybe less known, but maybe the most promising area, is improving artificial intelligence by increasing the computing power or reducing the data needed. At least these two papers demonstrate that equal or better results are achievable with less data.