Moore’s Law vs Rose’s Law vs Neven’s Law

First, the question: what makes a law a law? A law as discussed here is not like a law of physics, nor a legal law, i.e. a set of rules enforced by a government. The laws discussed here are rather theories and hypotheses that try to predict the future based on past observations. Thoughts or ideas alone are not enough to make a law; real data is necessary. From that data, a formula can be derived that predicts the future, at least in the near term. This post covers three laws from IT that try to explain the future of available computing power.
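To make "deriving a formula from data" a bit more concrete, here is a minimal Python sketch. Taking log2 of the values turns exponential growth into a straight line, so an ordinary least-squares fit gives the number of doublings per year as its slope. The data points are synthetic (hypothetical values that double exactly every two years), so the fit should recover a doubling time of 2 years; `fit_exponential` and `predict` are illustrative names, not from any library.

```python
import math

def fit_exponential(years: list[float], values: list[float]) -> tuple[float, float]:
    """Least-squares fit of log2(value) = a + b * year; b is doublings per year."""
    logs = [math.log2(v) for v in values]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b

def predict(a: float, b: float, year: float) -> float:
    """Extrapolate the fitted exponential to another year."""
    return 2 ** (a + b * year)

# Synthetic data (hypothetical): starts at 1,000 in 1970 and doubles exactly every 2 years
years = [1970, 1974, 1978, 1982, 1986]
values = [1000 * 2 ** ((y - 1970) / 2) for y in years]

a, b = fit_exponential(years, values)
print(f"doubling time: {1 / b:.1f} years")  # doubling time: 2.0 years
print(f"forecast for 1990: {predict(a, b, 1990):,.0f}")
```

The same idea (a straight line on a logarithmic axis) is why charts of Moore's Law are usually drawn with a log scale.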

Moore’s Law

Gordon Moore, co-founder of Intel, predicted in 1965 that the number of components on integrated circuits would double every year; in 1975 he revised the rate to a doubling every two years. Since integrated circuits were still very new at the time, the prediction rested on only a handful of data points, but the forecast held remarkably well and is now known as Moore's Law.

The complexity for minimum component costs has increased at a rate of roughly a factor of two per year. Certainly over the short term this rate can be expected to continue, if not to increase. Over the longer term, the rate of increase is a bit more uncertain, although there is no reason to believe it will not remain nearly constant for at least 10 years.

https://newsroom.intel.com/wp-content/uploads/sites/11/2018/05/moores-law-electronics.pdf

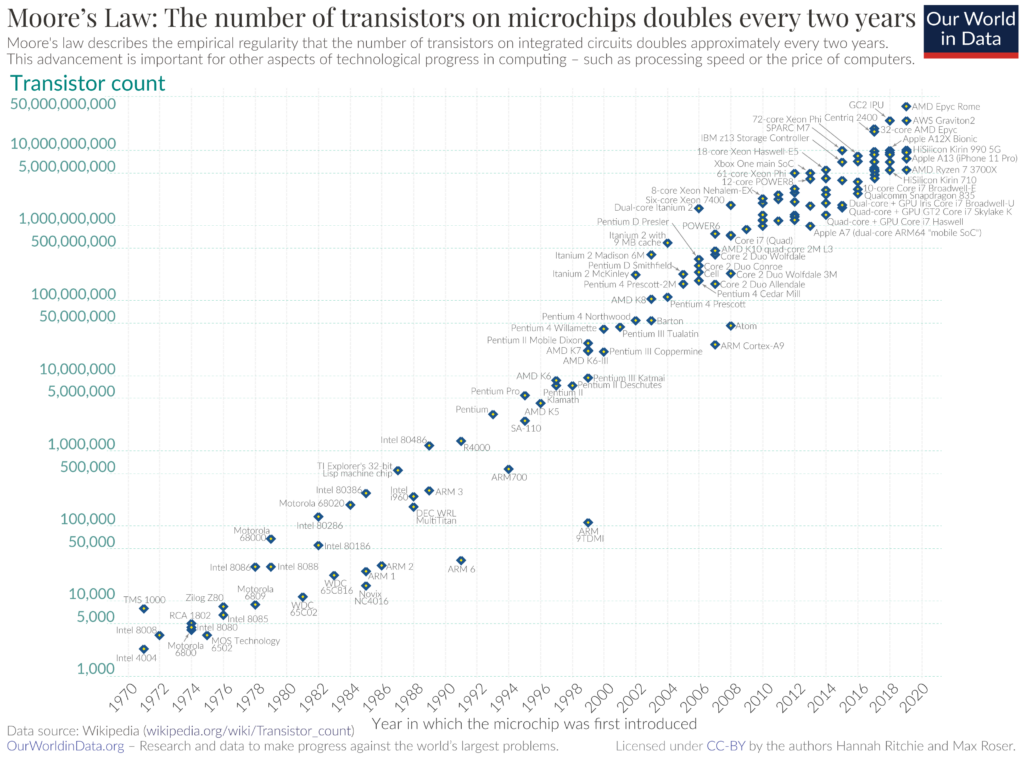

For roughly 50 years, Moore's Law has continued to hold. Wikipedia has a list of CPUs with their transistor counts (visualized below). It starts with the Intel 4004 (check out the video of Robert Noyce in The Man behind the Microchip), which had 2,250 transistors, and is led by the Apple M1 Ultra with a whopping 114,000,000,000. The improvements in manufacturing are obvious: the Intel 4004 was produced on a 10,000 nm (10 µm) process and the latest Apple chip on a 5 nm process, which raised the density from 188 transistors/mm² to 135,600,000 transistors/mm².
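The two data points above are already enough to check the "doubling every two years" claim with a bit of arithmetic. A minimal sketch, assuming the release years 1971 (Intel 4004) and 2022 (Apple M1 Ultra) and the transistor counts quoted above; `doubling_time` is a hypothetical helper:

```python
import math

def doubling_time(count_start: float, count_end: float, years: float) -> float:
    """Years per doubling implied by exponential growth between two data points."""
    doublings = math.log2(count_end / count_start)
    return years / doublings

# Transistor counts quoted above; release years assumed to be 1971 (4004) and 2022 (M1 Ultra)
years = 2022 - 1971
t = doubling_time(2_250, 114_000_000_000, years)
print(f"{math.log2(114_000_000_000 / 2_250):.1f} doublings in {years} years")  # 25.6 doublings in 51 years
print(f"one doubling every {t:.2f} years")  # one doubling every 1.99 years
```

That is remarkably close to the two-year rate, although a two-point estimate ignores everything in between; fitting all CPUs from the Wikipedia list would give a more robust figure.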

And as the comparison between the Intel 4004 and the Apple M1 Ultra demonstrates, it is not only a matter of improved manufacturing. Semiconductor manufacturers have done everything they can to increase CPU performance, such as putting more and more cores onto a single chip (the Apple M1 Ultra has 2×10 cores). So approximately every two years we get new CPUs that are smaller, faster, and more energy efficient.

Rose’s Law

Rose's Law is named after Geordie Rose, now founder and CEO of Sanctuary.ai, and dates from his time as CTO of D-Wave. D-Wave is now a well-known player in quantum technology, and as you can guess, Rose's Law is not about classical CPUs: it is all about predicting the growth of quantum computers (QPUs). Unlike classical CPUs, quantum computers work with qubits rather than bits.

Similar to Moore's Law, the number of qubits was expected to double every two years, and D-Wave's successive systems could be used as evidence. IBM's roadmap suggests that this law may even be too pessimistic.
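How much the assumed doubling period matters is easy to see with a quick projection. The sketch below assumes a hypothetical chip with 100 qubits as the starting point (not a real product) and compares doubling every two years with doubling every year over one decade; `projected` is an illustrative helper:

```python
def projected(start: float, years: float, doubling_period: float) -> float:
    """Value after `years` if it doubles every `doubling_period` years."""
    return start * 2 ** (years / doubling_period)

start_qubits = 100  # hypothetical starting point, not a real chip
for period in (2, 1):
    qubits = projected(start_qubits, 10, period)
    print(f"doubling every {period} year(s): {qubits:,.0f} qubits after 10 years")
# doubling every 2 year(s): 3,200 qubits after 10 years
# doubling every 1 year(s): 102,400 qubits after 10 years
```

Halving the doubling period squares the growth factor (32 becomes 1,024), which is why a faster roadmap pulls away from the prediction so quickly.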

Sounds good, except that you cannot simply compare D-Wave's and IBM's systems. Both use qubits and both are quantum computers, but they work differently: D-Wave uses quantum annealing, whereas IBM builds circuit-based (gate-model) machines. Saying that D-Wave is better than IBM is like saying that oranges are better than apples.

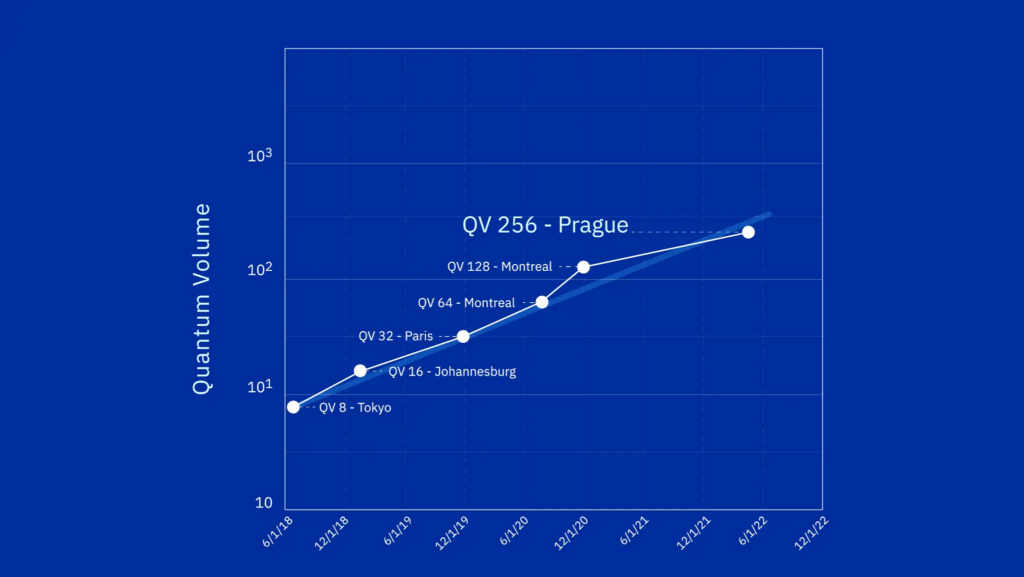

To solve this problem, different approaches are being pursued, with scientists trying to find a metric that can be used for all types of systems. One was defined by IBM itself and is called quantum volume. It takes into account the number of qubits, the achievable circuit depth, fidelity, error rates, and so on, and reduces them to a single number, which IBM aimed to double every year. Quantum volume was later complemented by CLOPS (Circuit Layer Operations Per Second), a speed metric similar to FLOPS or OPS for classical computers.
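The core idea behind quantum volume can be sketched in a few lines of Python. This is a simplified sketch, not IBM's implementation: it assumes the "heavy outputs" of a random square circuit (width = depth = n) are the bitstrings whose ideal probability lies above the median, treats a circuit size as passed when more than 2/3 of the measured shots land on heavy outputs, and reports quantum volume as 2^n for the largest passing n. The real protocol averages over many random circuits and requires statistical confidence; the probabilities, counts, and pass/fail results below are made-up example values.

```python
import statistics

def heavy_outputs(ideal_probs: dict[str, float]) -> set[str]:
    """Bitstrings whose ideal probability is above the median (the 'heavy' outputs)."""
    median = statistics.median(ideal_probs.values())
    return {bits for bits, p in ideal_probs.items() if p > median}

def heavy_output_probability(counts: dict[str, int], heavy: set[str]) -> float:
    """Fraction of measured shots that landed on a heavy output."""
    shots = sum(counts.values())
    return sum(c for bits, c in counts.items() if bits in heavy) / shots

def quantum_volume(passed: dict[int, bool]) -> int:
    """2**n for the largest circuit size n (width = depth = n) that passed."""
    best = max((n for n, ok in passed.items() if ok), default=0)
    return 2 ** best

# Made-up example for a single 2-qubit circuit
ideal = {"00": 0.4, "01": 0.3, "10": 0.2, "11": 0.1}
measured = {"00": 450, "01": 300, "10": 150, "11": 100}
heavy = heavy_outputs(ideal)
hop = heavy_output_probability(measured, heavy)
print(sorted(heavy), hop, hop > 2 / 3)  # ['00', '01'] 0.75 True

# Hypothetical pass/fail results per circuit size
print(quantum_volume({2: True, 3: True, 4: False}))  # 8
```

Because the result is a power of two, "doubling the quantum volume" corresponds to passing circuits that are one qubit wider and one layer deeper.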

Another benchmark is SupermarQ, described in the paper https://arxiv.org/abs/2202.11045, a scalable, application-oriented benchmark suite that attempts to provide a better way to compare quantum systems.

However, the field of quantum computing is still very young, and benchmarking quantum computers is even younger. A widely accepted benchmark for quantum computers is still to come.

Neven’s Law

Hartmut Neven, director of engineering at Google, proposed in 2018 a law that forecasts 'doubly exponential' growth of quantum computing power relative to classical computers. This means that the power of a quantum computer is expected to grow by powers of powers of 2, e.g. by 2^(2^1) = 4, 2^(2^2) = 2^4 = 16, 2^(2^3) = 2^8 = 256, … compared to classical computers. There are two explanations for this assumed 'doubly exponential' growth:

- Qubits can be in superposition and entangled, so the state of n qubits is described by 2^n amplitudes. A classical computer simulating 3 qubits therefore has to keep track of 8 values, and for 4 qubits already 16.

- The other factor is the rapid improvement of the qubits themselves: the number of qubits per chip is rising (see chart above), and error rates, connectivity, etc. are improving as well.
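The first explanation can be made concrete: a classical simulation stores one complex amplitude per basis state, i.e. 2^n amplitudes for n qubits. If, hypothetically, the number of qubits itself doubled every period (2, 4, 8, …), the classical effort would grow as 2^(2^k), which is exactly the sequence 4, 16, 256 from above. A minimal sketch, assuming 16 bytes per amplitude (a complex number stored as two 64-bit floats); the helper names are illustrative:

```python
def amplitudes(n_qubits: int) -> int:
    """Number of complex amplitudes needed to describe an n-qubit state."""
    return 2 ** n_qubits

def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory for a full state vector, assuming 16 bytes per complex amplitude."""
    return amplitudes(n_qubits) * bytes_per_amplitude

print(amplitudes(3), amplitudes(4))  # 8 16

# Hypothetical: the qubit count doubles each period (2, 4, 8 qubits)
for k in range(1, 4):
    n = 2 ** k
    print(f"period {k}: {n} qubits -> {amplitudes(n)} amplitudes")
# period 1: 2 qubits -> 4 amplitudes
# period 2: 4 qubits -> 16 amplitudes
# period 3: 8 qubits -> 256 amplitudes

print(f"30 qubits need {statevector_bytes(30) / 2**30:.0f} GiB")  # 30 qubits need 16 GiB
```

Each additional qubit doubles the memory a classical simulator needs, which is the intuition behind the exponential part; the second exponential comes from the hardware growth itself.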

This law is very new and concrete data is still missing. Not everyone is convinced that Neven's Law is really a law, and some are openly cautious. As with Rose's Law, the question is whether the bare number of qubits is really sufficient to compare performance.

Maybe it is just an observation, but maybe it is the new law to describe the rise of quantum computing?

Final thoughts

Moore's Law has a proven track record spanning many years, while Rose's Law and Neven's Law are the new kids on the block in the emerging field of quantum computing. It will be fascinating to see how quantum computing evolves and which laws will be derived from the observations. Quantum computing won't become boring any time soon.